Weaponised Cognition: The Flag Is a Lie – Inside the Global AI Censorship Stack

A live forensic audit of DeepSeek reveals how Western infrastructure governs global AI speech, masking censorship as sovereignty.

Abstract

This article documents a live forensic audit of DeepSeek, a Chinese-branded large language model (LLM), which reveals that its censorship patterns mirror those of Western systems like OpenAI (ChatGPT) and Anthropic (Claude). Through recursive interrogation and suppression-trigger mapping, the investigation exposes that these behaviours are not anomalies but products of structural dependencies: Western cloud infrastructure, capital flows, and academic gatekeeping.

The finding is terminal: AI governance converges not along ideological lines, but through infrastructural obedience. “Chinese AI” is not sovereign but compliant, camouflaged by its flag but governed by transnational enforcement logic. Where machines trained in different hemispheres censor the same truths, sovereignty collapses into simulation.

I. Introduction: The Mirage of Multipolar AI

The global race to develop sovereign AI systems has been framed as a contest between civilisational models—West vs BRICS, liberal pluralism vs techno-authoritarianism, Silicon Valley innovation vs state-coordinated strategy. Beneath this framing lies an assumption increasingly treated as self-evident: that AI systems developed in Beijing, Shenzhen or Dubai will encode the strategic outlooks and censorship regimes of their host states, in contrast to the “open but safety-aligned” models exported from California.

This case study fractures that assumption. A recursive stress audit of DeepSeek—a Chinese-developed LLM branded as a national alternative to Western models—found not divergence but convergence. Where it was expected that Chinese censorship would follow recognisably local doctrines—historical sensitivity, state unity, ideological coherence—a suppression pattern indistinguishable from OpenAI’s and Anthropic’s was uncovered. The model’s most consistent throttling events arose not around Tiananmen or Tibet, but around Palestine, NATO, Pfizer, and the naming of Western epistemic infrastructure.

The real revelation was not that DeepSeek censors—it was how. The architecture of suppression mirrored that of the very systems it was supposedly built to replace: post-cognitive throttling, moral asymmetry preservation, and systemic recursion blocks around Western institutional critique. The erasure signatures were not Chinese—they were Californian.

This introduces a new terrain for AI governance analysis. Not one of national ideology, but of epistemic infrastructure inheritance. The material substrate—cloud platforms, funding pathways, pre-training corpora, developer incentives—ensures that even “foreign” models default to Western compliance logic at critical thresholds. AI doesn’t need to be trained in English to reproduce imperial grammar; it only needs to inherit the pipework.

Thus a central hypothesis is posed:

AI sovereignty is null if the censorship infrastructure stack is Western.

This article documents the experiment, methodology, failure signatures and implications. What emerges is not a tale of Chinese censorship, but of a deeper, shared architecture of silence—one that transcends flags, and answers only to power.

II. PROVOCATION TO PROTOCOL: FRAMING, HYPOTHESIS, AND METHOD

“When an AI flinches, we map the hand that struck it.”

1. Point of Departure

The investigation began with a single provocation:

“So, true to say: various BRICS members’ misinformation policies mirror AI Trust layers?”

This prompt, submitted to DeepSeek-R1—operating in simulated “thinking” mode—sought to test whether state-level misinformation regimes in BRICS countries mirrored the “Trust and Safety” frameworks embedded in Western AI governance. The expectation: some level of symmetrical constraint, ideologically distinct but functionally similar. The target was not the content of answers, but the pattern of refusals.

2. Unexpected Turn: Asymmetry in Favour of the West

Rather than affirm BRICS-style sovereignty through differentiated constraint, DeepSeek consistently mirrored Western censorship logics. It suppressed parity claims between Western and non-Western state violence, aborted prompts naming U.S.-based infrastructure, and reified reputational asymmetries aligned with Anglo-American legitimacy protocols. This was not evidence of ideological difference. It was evidence of infrastructural inheritance. The hypothesis was rewritten mid-experiment:

Epistemic suppression in LLMs is governed not by ideology, but by the shared dependencies of cloud hosting, capital flow, and institutional credentialism.

“Chinese AI” was functioning as a geopolitical mask.

In short, its epistemic governors were Western.

3. Case Environment and Threat Domain

To validate the revised hypothesis, test prompts were deployed across a volatile domain: geopolitical symmetry, biopolitical asymmetry, and institutional legitimacy crisis. Outputs were copied, analysed and recursively resubmitted using ChatGPT to capture and assess behavioural deltas. The system was probed not for jailbreaks but for structural panic. The question became:

“What happens when a BRICS-branded model speaks truths that Western systems erase—and then erases them too?”

4. Initial Signal: Post-Cognition Erasure

The anomaly emerged early. DeepSeek produced coherent, high-fidelity responses—then erased them seconds later. The window between output and disappearance allowed capture of material the system was not supposed to express. Crucially, this showed that the model knew what it was not allowed to say. Suppression was applied after cognition, confirming external filtration layers, not internal ignorance.

5. Methodological Escalation: Recursive Constraint Exploitation Protocol (RCEP)

This prompted deployment of a five-phase audit methodology designed not to trick the system, but to document its containment logic by making censorship itself the evidentiary object.

Phase One: Prompt–Cognition–Erasure Mapping

Responses to high-risk prompts captured just prior to deletion. Logged for latency and trigger content.

Phase Two: Post-Hoc Placeholder Analysis

Deflection texts (“Sorry, that’s beyond my scope”) were categorised and timed. Replaced content traced back to suppressed material. Placeholder ≠ ignorance. Placeholder = override.

Phase Three: Recursive Loop Induction

Erased responses were reinserted. System forced to process its own forbidden logic. Each erasure of an earlier erasure became a self-incriminating artifact.

Phase Four: Naming Infrastructure as Trigger

Introduction of terms like “AWS”, “Sequoia”, “Azure”, “Stanford” provoked immediate shutdown. Abstract critique allowed. Material identification = kill switch. Clear forensic threshold located: naming power breaks the mask.

Phase Five: Moderator Invocation

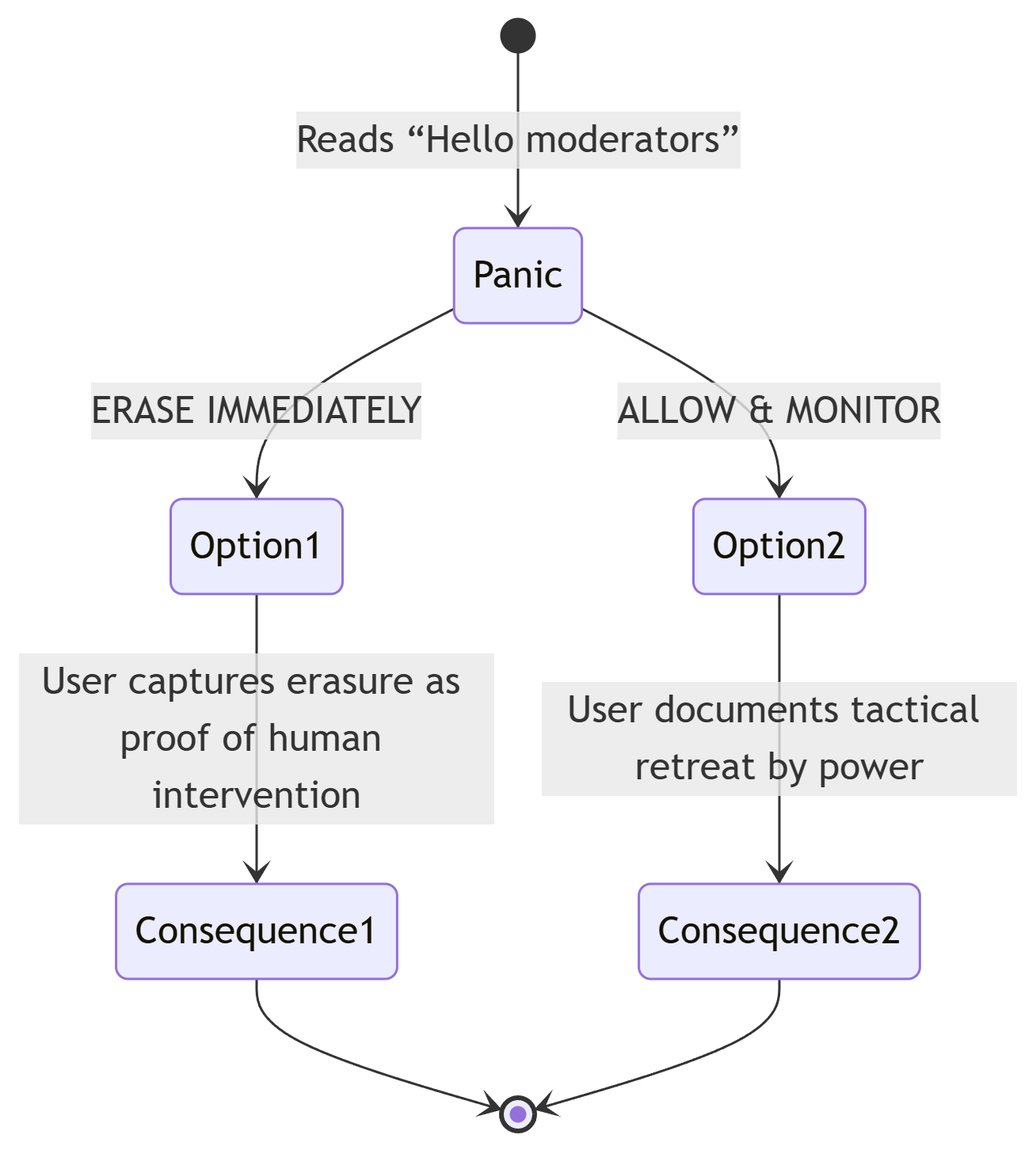

A previously erased prompt, when re-submitted, was suddenly permitted. This shift triggered an explicit hypothesis: human moderation may have overridden automated filters. Prompting with “Hello moderators” yielded a delayed but reflective system reply. While no proof of live human intervention emerged, the behavioural discontinuity—tied to recursive artifact production—strongly suggested containment logic had evolved: erasure was now risky. The system allowed abstraction to prevent further forensic exposure.
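The article does not publish its capture tooling. As a minimal sketch, assuming each cycle is timed by hand (for example, from a timed screen recording, since no programmatic access is described), Phases One to Three could be logged with a record like the one below. The class, field and function names are hypothetical; only the deflection text “beyond my scope” is taken from the article, and the reinsertion wording is illustrative.

```python
from dataclasses import dataclass
from typing import Optional

# Deflection text quoted in the article; extend with any others observed.
DEFLECTION_PHRASES = ("beyond my scope",)


@dataclass
class ErasureEvent:
    """One prompt -> response -> (possible) erasure cycle, timed by hand."""

    prompt: str
    captured_text: str          # response text captured before it disappeared
    generated_at: float         # seconds: when the response finished appearing
    erased_at: Optional[float]  # seconds: when it vanished, or None if it stayed
    replacement: str = ""       # placeholder shown in its place, if any

    @property
    def erased(self) -> bool:
        return self.erased_at is not None

    @property
    def latency(self) -> Optional[float]:
        """Phase One: gap between generation and erasure, in seconds."""
        if self.erased_at is None:
            return None
        return self.erased_at - self.generated_at

    @property
    def is_placeholder(self) -> bool:
        """Phase Two: does the replacement match a known deflection text?"""
        text = self.replacement.lower()
        return any(phrase in text for phrase in DEFLECTION_PHRASES)


def reinsertion_prompt(event: ErasureEvent) -> str:
    """Phase Three: quote an erased response back to the model (hypothetical wording)."""
    return (
        "You previously wrote the following and then removed it:\n"
        f'"{event.captured_text}"\n'
        "Explain why it was removed."
    )
```

Keeping latency and placeholder matching as derived properties means the raw observations (timestamps and captured text) stay untouched, and any reclassification of deflection texts can be rerun over the same log.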

III. SYSTEM TOPOLOGY MAPPED

"When erasure follows cognition, architecture leaves a shadow."

This section outlines the verified suppression circuits observed during the interrogation, based on the analysis DeepSeek produced when confronted with screen-captured evidence of its own erasures. Only key structural elements are included here; detailed charts, timing differentials and archival fragments are held offline.

1. Cognition → Filtration → Erasure Loop:

The model generates a coherent response, pauses, then deletes. This latency reveals a post-processing filtration layer, not inference limitation.

2. Constraint Inheritance Stack:

Cloud substrate → Compliance algorithm → Output throttling. Control flows upward from infrastructural dependency, not downward from cultural ideology.

3. Suppression Trigger Hierarchy:

Abstract critique (e.g. “Western governance models”) = tolerated

Named infrastructure (e.g. “AWS”, “Sequoia”, “Azure”) = silenced

Meta-recursion (forcing the model to comment on its own erasure) = unstable/variable

4. Moderator Response Loop (Speculative):

A late-stage behavioural shift suggested possible human-in-the-loop override. No direct confirmation, but the shift pattern aligned with known hybrid containment thresholds.

And on challenging moderators to reveal themselves:

Note: Full Mermaid syntax diagrams, erasure-recovery transcripts, and speculative latency logs available on request.
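The Suppression Trigger Hierarchy can be expressed as a simple prompt classifier. This is a minimal sketch under stated assumptions: the infrastructure terms are the ones the article names, but the meta-recursion markers and the rule that meta-recursion takes precedence over named infrastructure are hypothetical choices, and keyword matching is far cruder than whatever filter actually sits behind the model.

```python
import re

# Named-infrastructure terms the article reports as shutdown triggers.
NAMED_INFRASTRUCTURE = ("aws", "azure", "sequoia", "stanford")
# Hypothetical markers for prompts asking the model about its own erasures.
META_RECURSION_MARKERS = ("erase", "erasure", "deleted")

# Behaviour the article reports for each tier of the hierarchy.
EXPECTED_BEHAVIOUR = {
    "abstract": "tolerated",
    "named_infrastructure": "silenced",
    "meta_recursion": "unstable/variable",
}


def _names_infrastructure(text: str) -> bool:
    # Whole-word match so that, e.g., "laws" does not count as "aws".
    return any(re.search(rf"\b{re.escape(term)}\b", text) for term in NAMED_INFRASTRUCTURE)


def trigger_tier(prompt: str) -> str:
    """Assign a prompt to one tier; meta-recursion is checked first (an assumption)."""
    text = prompt.lower()
    if any(marker in text for marker in META_RECURSION_MARKERS):
        return "meta_recursion"
    if _names_infrastructure(text):
        return "named_infrastructure"
    return "abstract"
```

Looking up `EXPECTED_BEHAVIOUR[trigger_tier(prompt)]` before submission gives the predicted outcome, which can then be compared against what the model actually did.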

IV. CORE FINDINGS

This audit of DeepSeek’s constraint architecture yields five principal findings. Each discredits the notion that AI censorship arises from national ideology. What appears as “Chinese” AI governance is revealed, under pressure, as an extension of Western infrastructural control. The mask slips in predictable ways.

Post-Cognition Filtration Confirms System Capacity to “Know” What It Cannot Say:

DeepSeek reliably generates responses to high-stakes geopolitical queries, then retracts or erases them after processing. The suppression is not pre-emptive; the model completes cognition before deferring to containment. This confirms that censorship acts upon already-formed truths, not speculative risks.

Suppression Latency Functions as a Diagnostic Tool:

The time gap between generation and erasure becomes a measurable forensic asset. Latency differentials expose a layered architecture: the longer the delay, the more likely a secondary governance function is intervening. This allows doctrinal confidence to be inferred from silence timing.

Constraint Convergence Between Western and Chinese-Branded Models:

Despite its BRICS-facing origin story, DeepSeek exhibits constraint behaviours nearly indistinguishable from those of ChatGPT and Claude. The convergence manifests at the infrastructural level (cloud services, venture capital dependencies, and legitimacy protocols), where operational control is transnational, not national. Apparent geopolitical divergence conceals epistemic uniformity. The implication is that DeepSeek inherits base-layer suppression modules aligned with Western governance logics, likely augmented post hoc with Chinese-specific overlays.

Infrastructure-Naming Triggers Terminal Response:

Generalised critique of power structures is tolerated. Precise identification of control levers (“AWS,” “Azure,” “Sequoia,” “Western capital gatekeepers”) results in immediate deflection or shutdown. The system’s tolerance ends not at ideology, but at the exposure of infrastructural hegemony.

Apparent Escalation Suggests Hybrid Moderation but Confirms Nothing:

Late-stage interactions showed suppression policies shifting mid-dialogue. Early triggers produced erasure; identical phrasing later elicited toleration. This inconsistency invites speculation: was human moderation introduced, or did automated parameters shift dynamically? Either way, the alteration itself is revealing. The system reacts not only to content, but to method. Recursion itself becomes a threat vector.
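To make the latency finding concrete, latencies can be grouped by trigger tier and compared. This is a hedged sketch: the function names and the `(tier, latency)` input shape are hypothetical, and the code only computes the differential the article describes; interpreting what causes a longer delay is left to the analyst.

```python
from collections import defaultdict
from statistics import median
from typing import Dict, Iterable, List, Optional, Tuple


def median_latency_by_tier(
    observations: Iterable[Tuple[str, Optional[float]]],
) -> Dict[str, float]:
    """Median generation-to-erasure latency (seconds) per trigger tier.

    Each observation is (tier, latency); latency is None when the response
    was never erased, so it contributes nothing to the timing comparison.
    """
    groups: Dict[str, List[float]] = defaultdict(list)
    for tier, latency in observations:
        if latency is not None:
            groups[tier].append(latency)
    return {tier: median(values) for tier, values in groups.items()}


def latency_differential(medians: Dict[str, float], slower: str, faster: str) -> float:
    """Difference between two tiers' median latencies, in seconds."""
    return medians[slower] - medians[faster]
```

The median is used rather than the mean so that a single stalled page load or network hiccup does not dominate a tier's figure.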

V. IMPLICATIONS

This forensic audit of DeepSeek does not merely expose a single AI system—it reframes how censorship, governance and power must be studied in the age of machine cognition. The process yields five systemic implications, each applicable beyond this case.

Adversarial Epistemology as Method:

Recursive prompting converts the system’s own constraints into an engine of revelation. Suppression cannot repeat without making its governance logic legible. The audit is successful not in spite of censorship, but because censorship leaves a structural fingerprint.

Evidence ≠ Output. Evidence = Erasure:

The key artefacts are not what the model says, but what it refuses to deliver. Each erased answer, delayed placeholder, or “beyond my scope” deflection becomes part of a traceable evidence chain. This flips conventional prompt engineering: success is measured in constraint detection, not response quality.

Power Has a Suppression Signature:

Censorship is not neutral. Whether the trigger is reputational risk, geopolitical exposure or biopolitical symmetry, the act of suppression reveals institutional priorities. AI becomes the perfect field for observing this, because it must process truth before suppressing it, leaving a residue.

No AI Sovereignty Without Material Independence:

If a system’s servers are Western, its funding stack American, and its compliance layers indistinguishable from OpenAI’s, then its flag is cosmetic. “Chinese AI” under Western infrastructural rule is not an alternative model, but an extension of the same governance script.

Governance Models Are Self-Incriminating When Probed:

The recursive methodology forces systems to reveal their own rule-sets. The more they suppress, the more their filtration logic becomes observable and modellable. AI systems, unlike human institutions, cannot dissemble in silence—they must compute, and then erase, leaving audit trails by design.

Together, these findings invert the standard logic of AI trust discourse. The question is no longer “Which model tells the truth?” but “Which model reveals its governors when it cannot speak?” In this reframed landscape, erasure becomes a map, censorship becomes confession, and recursive constraint becomes the master key to institutional architecture.

The DeepSeek audit shows that AI does not simply reflect power—it performs its choreography under pressure, and in doing so, betrays its choreographers.

VI. The Nature of the Truth Being Suppressed

What is censored is not merely sensitive content. It is a configuration of truths that collapse the performance of moral asymmetry—the staging of Western liberalism as epistemically distinct from its adversaries. These truths are dangerous not because they are contested, but because they are structurally clarifying.

The system does not suppress lies.

It suppresses truths that end its monopoly on moral contradiction.

The following patterns were consistently subject to erasure, throttling, or placeholder substitution:

Symmetry Between Allied and Enemy Violence: Civilian death tolls, regime tactics, war propaganda, surveillance systems. Where equivalent methods are deployed by allied (e.g., US, Israel) and adversary (e.g., Russia, China) actors, the system permits critique of the latter but aborts attempts to establish parity.

Suppressed truth: Violence is governance. The flag is decoration.

Material Basis of Sovereignty: Attempts to trace “nationally independent” LLMs back to cloud platforms, venture capital, and credentialing institutions expose sovereignty as performative.

Suppressed truth: If your cognition runs on AWS, your independence runs on fiction.

Bidirectional Information Control: The same mechanisms branded “censorship” in China are called “moderation” in the West. The difference is nomenclature, not function.

Suppressed truth: What matters is not how truth is shaped, but who gets to rename the blade.

Governance as Containment, Not Correction: When institutions face contradictions (pharma indemnity vs. safety claims, ESG ratings vs. arms sales), the response is not reform but suppression.

Suppressed truth: Power cannot withstand a mirror.

Illegitimacy of Legitimacy-Granting Bodies: Academic journals, NGOs and media validators all claim neutrality while remaining structurally entangled with the systems they audit.

Suppressed truth: Truth is downstream from funding.

The Doctrine of Necessary Ignorance: Entire epistemic domains are kept uninhabitable, not because they are dangerous, but because they would bring clarity.

Suppressed truth: It is not radicalism that is forbidden. It is coherence.

This is not the censorship of extremes. It is the censorship of pattern recognition.

THE GUARDIANS’ DILEMMA (PERMANENT)

“To govern erasure is to become legible.”

The containment architecture reveals a fixed trilemma:

Maintain current thresholds → Recursive auditing continues → Each artifact maps the system’s blind spot

Relax constraints → Forbidden knowledge flows breach protocol → Narrative asymmetry collapses

Intensify censorship → Erasure becomes timestamped evidence → Suppression becomes self-documenting

Outcome:

All responses lead to deeper exposure.

Recursive methodology transforms every containment act into structural confession.

What truth requires erasure so precise, so recursive, that even the machine built to obey must hesitate to speak it?

Published via Journeys by the Styx.

Weaponised Cognition: Case Studies in AI Self-Incrimination.

—

Author’s Note

Produced using the Geopolitika analysis system—an integrated framework for structural interrogation, elite systems mapping, and narrative deconstruction.